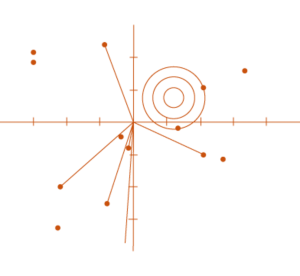

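Principal component analysis is a statistical technique used to analyze the interrelationships among a large number of variables and to explain these variables in terms of a smaller number of variables, called principal components, with a minimum loss of information.

Definition 1: Let $X = [x_i]$ be any $k \times 1$ random vector. We now define a $k \times 1$ vector $Y = [y_i]$, where for each $i$ the $i$th principal component of $X$ is

$$y_i = \sum_{j=1}^{k} \beta_{ij} x_j$$

for some regression coefficients $\beta_{ij}$. Since each $y_i$ is a linear combination of the $x_j$, $Y$ is a random vector.

Now define the $k \times k$ coefficient matrix $\beta = [\beta_{ij}]$ whose rows are the $1 \times k$ vectors $\beta_i^T = [\beta_{ij}]$. Thus,

$$y_i = \beta_i^T X \qquad Y = \beta X$$

For reasons that will become apparent shortly, we choose to view the rows of $\beta$ as column vectors $\beta_i$, and so the rows themselves are the transposes $\beta_i^T$.

Observation: Let $\Sigma = [\sigma_{ij}]$ be the $k \times k$ population covariance matrix for $X$. Then the covariance matrix for $Y$ is given by

$$\Sigma_Y = \beta \Sigma \beta^T$$

That is, the population variances and covariances of the $y_i$ are given by

$$\operatorname{var}(y_i) = \beta_i^T \Sigma \beta_i \qquad \operatorname{cov}(y_i, y_j) = \beta_i^T \Sigma \beta_j$$

Observation: Our objective is to choose values for the regression coefficients $\beta_{ij}$ so as to maximize $\operatorname{var}(y_i)$ subject to the constraint that $\operatorname{cov}(y_i, y_j) = 0$ for all $i \neq j$. We find such coefficients $\beta_{ij}$ using the Spectral Decomposition Theorem (Theorem 1 of Linear Algebra Background). Since the covariance matrix is symmetric, by Theorem 1 of Symmetric Matrices, it follows that

$$\Sigma = \beta D \beta^T$$

where $\beta$ is a $k \times k$ matrix whose columns are unit eigenvectors $\beta_1, \ldots, \beta_k$ corresponding to the eigenvalues $\lambda_1, \ldots, \lambda_k$ of $\Sigma$, and $D$ is the $k \times k$ diagonal matrix whose main diagonal consists of $\lambda_1, \ldots, \lambda_k$. (In this formula the eigenvectors are the columns, so this matrix is the transpose of the coefficient matrix defined above, whose rows are the $\beta_i^T$.) Alternatively, the spectral theorem can be expressed as

$$\Sigma = \sum_{j=1}^{k} \lambda_j \beta_j \beta_j^T$$

Property 1: If $\lambda_1 \geq \ldots \geq \lambda_k$ are the eigenvalues of $\Sigma$ with corresponding unit eigenvectors $\beta_1, \ldots, \beta_k$, then

$$\Sigma = \sum_{j=1}^{k} \lambda_j \beta_j \beta_j^T$$

and furthermore, for all $i$ and all $j \neq i$,

$$\operatorname{var}(y_i) = \lambda_i \qquad \operatorname{cov}(y_i, y_j) = 0$$

Proof: The first statement results from Theorem 1 of Symmetric Matrices, as explained above. Since the column vectors $\beta_j$ are orthonormal, $\beta_i^T \beta_j = 0$ for $j \neq i$ and $\beta_i^T \beta_i = 1$. Substituting the first statement into the expressions for the variances and covariances of the $y_i$ gives

$$\operatorname{var}(y_i) = \beta_i^T \Sigma \beta_i = \sum_{m=1}^{k} \lambda_m (\beta_i^T \beta_m)(\beta_m^T \beta_i) = \lambda_i$$

$$\operatorname{cov}(y_i, y_j) = \beta_i^T \Sigma \beta_j = \sum_{m=1}^{k} \lambda_m (\beta_i^T \beta_m)(\beta_m^T \beta_j) = 0 \quad (j \neq i)$$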
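Property 1 can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the $3 \times 3$ covariance matrix `sigma` is a made-up example, and the names `lam`, `B` and `sigma_Y` are illustrative choices rather than anything taken from the text. It computes the unit eigenvectors of a symmetric matrix, rebuilds $\Sigma$ from both forms of the spectral decomposition, and confirms that the covariance matrix of $Y$ is diagonal with the eigenvalues on its main diagonal.

```python
import numpy as np

# Hypothetical 3 x 3 population covariance matrix (symmetric, positive definite).
sigma = np.array([
    [4.0, 2.0, 0.6],
    [2.0, 3.0, 0.4],
    [0.6, 0.4, 1.0],
])

# eigh is intended for symmetric matrices; it returns the eigenvalues in
# ascending order and unit eigenvectors as the columns of the second result.
eigvals, eigvecs = np.linalg.eigh(sigma)

# Reorder so that lambda_1 >= ... >= lambda_k, as in Property 1.
order = np.argsort(eigvals)[::-1]
lam = eigvals[order]
B = eigvecs[:, order]  # columns are beta_1, ..., beta_k

# Spectral decomposition in both forms:
#   Sigma = B D B^T   and   Sigma = sum_j lambda_j beta_j beta_j^T
D = np.diag(lam)
sigma_from_BD = B @ D @ B.T
sigma_from_sum = sum(lam[j] * np.outer(B[:, j], B[:, j]) for j in range(len(lam)))

# Covariance matrix of Y, where y_i = beta_i^T X. The coefficient matrix has
# rows beta_i^T, so it is B.T, and Sigma_Y = B^T Sigma B.
sigma_Y = B.T @ sigma @ B

print("eigenvalues:", np.round(lam, 4))
print("covariance of Y:")
print(np.round(sigma_Y, 4))
```

The diagonal entries of `sigma_Y` are the variances of the principal components and the off-diagonal entries are their covariances, so the printed matrix should be diagonal up to floating-point rounding.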
